Impact of Artificial Intelligence

To get a Merit grade in this external exam, you will need to discuss some of the impacts of Artificial Intelligence.

We already know that the 2024 exam will focus on two key areas:

- Policies

- AI Hallucinations

You will be required to pick one of these areas in Artificial Intelligence and discuss it by answering the questions below.

Questions that were asked in the 2023 exam:

- How can the impact of human factors be considered when developing artificial intelligence for self-driving cars?

- What are the potential positives of future-proofing an artificial intelligence?

Questions that could be asked in 2024:

- How can the impact of human factors be considered when developing policies for AI generating pictures or text?

- What are AI Hallucinations? What future-proofing techniques could be used to minimize AI hallucinations?

Human Factors/Ethical Considerations/Sustainability/Social Impact

Computers are usually predictable: they follow set patterns and can follow rules exactly. Humans, however, are largely unpredictable: they can generally follow rules but struggle to be exact.

Humans can be malicious, illogical, emotional, rebellious and poor decision makers. Not all of these traits are negative; some of them can also be positives.

This can impact A.I. in a number of different ways. In this section we will give some examples, but your job is to research how humans impact A.I. programming.

Maliciousness - Human Factor


Road Safety

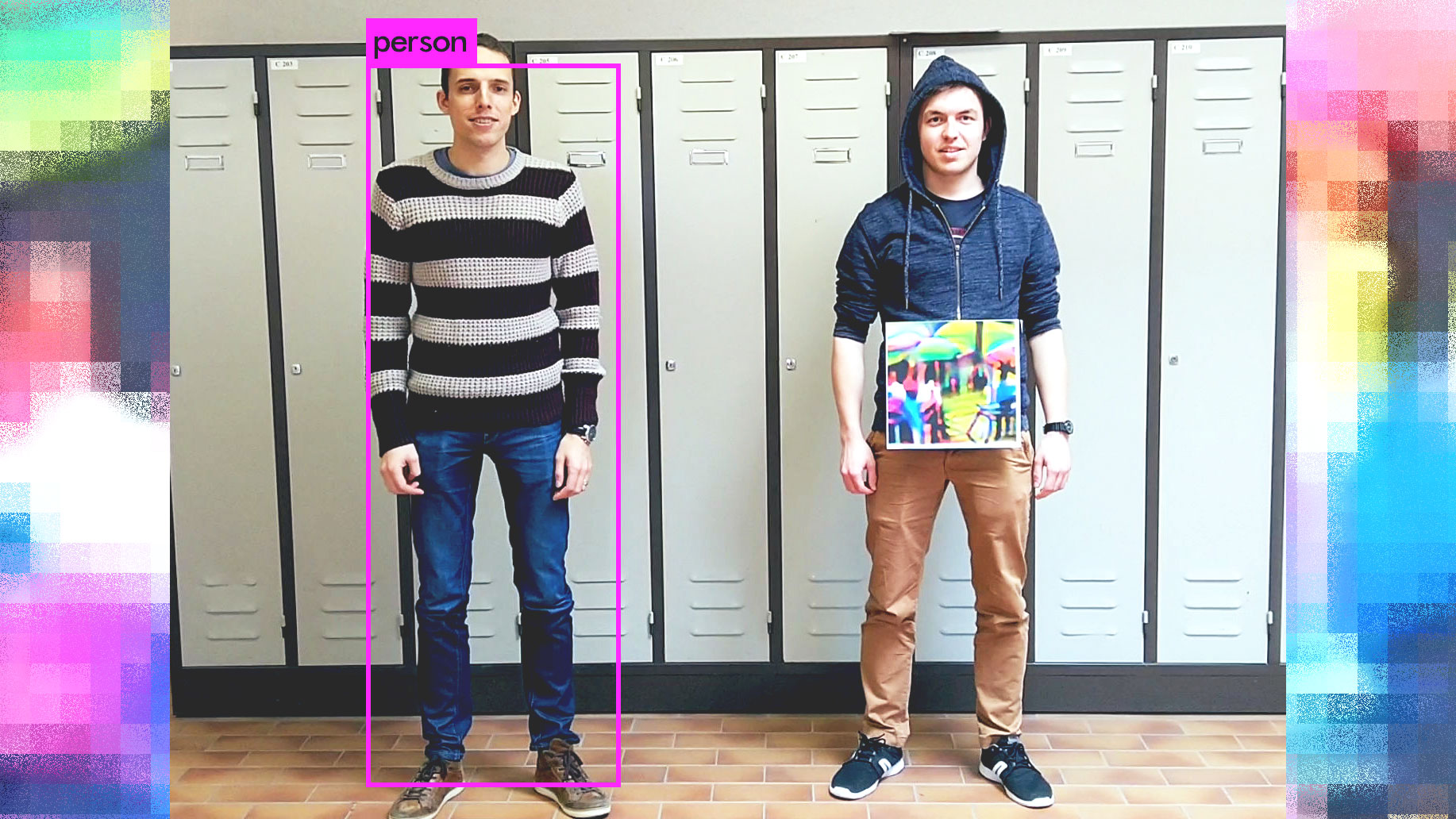

This article discusses how a laser pointer can be used to trick driverless cars into seeing red traffic lights as green: https://www.newscientist.com/article/2315634-driverless-cars-can-be-tricked-into-seeing-red-traffic-lights-as-green/

Corrupted Bots

Microsoft created a chat bot for use on Twitter. Malicious Twitter users targeted it and managed to teach it inappropriate language: https://www.reuters.com/article/us-microsoft-twitter-bot-idUSKCN0WQ2LA

Tricking Surveillance Cameras

A.I. is being used to track individuals and number plates, but humans are learning ways to fool it: https://www.theguardian.com/world/2019/aug/13/the-fashion-line-designed-to-trick-surveillance-cameras

Bad Decision Making - Human Factor


Self Driving Cars - Bad Humans

Self-driving cars obey the road rules all the time, without exception. This causes human drivers to crash into them: https://driving.ca/auto-news/news/humans-crashing-into-driverless-cars-are-exposing-a-key-flaw

Dealing with Randomness

When creating A.I., you might have to account for the randomness that humans bring: https://www.techrepublic.com/article/dont-forget-the-human-factor/

Training an A.I. Ideologically

In today's ideological climate, some academics describe race and gender as social constructs, meaning categories created by humans rather than fixed by biology. This causes problems when an A.I. looks at the data and reaches a different conclusion, for example algorithms that can detect a person's race from X-rays: https://www.wired.com/story/these-algorithms-look-x-rays-detect-your-race/

It is also a problem when an A.I. learns from other people's perceptions and biases: https://www.vox.com/future-perfect/22672414/ai-artificial-intelligence-gpt-3-bias-muslim

90% of CEOs are men. Should a Google Images search for "CEO" show mostly men? https://www.vox.com/future-perfect/22916602/ai-bias-fairness-tradeoffs-artificial-intelligence

A.I. being able to tell someone's biological sex using face recognition: https://www.vox.com/future-perfect/2019/4/19/18412674/ai-bias-facial-recognition-black-gay-transgender

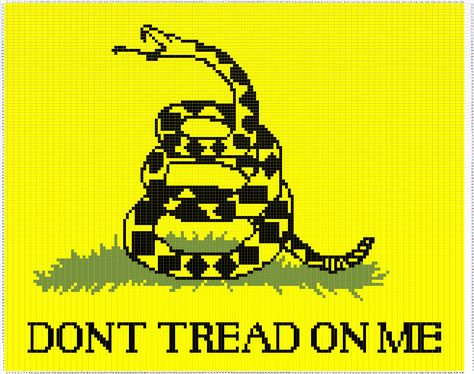

Rebellion and Freedom - Human Factor


Some humans value their freedoms and liberties, and A.I. could inhibit them: https://nationalinterest.org/blog/reboot/how-does-artificial-intelligence-destroy-our-freedoms-166771

A.I. can also be a threat to freedoms and liberties such as privacy: https://thinkml.ai/is-artificial-intelligence-a-threat-to-privacy/

This leads some people to think about ways to trick A.I., for example with adversarial attacks: https://analyticsindiamag.com/how-to-fool-ai-with-adversarial-attacks/
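Adversarial attacks work by making many small, carefully chosen changes to an input that a human would barely notice, so that the A.I. gives a different answer. The sketch below is a simplified illustration and not how any real camera system works: it assumes a made-up linear "person detector" with four input features, and applies the fast gradient sign method (FGSM), a well-known attack, in its simplest linear form.

```python
# A minimal sketch of an adversarial attack on a tiny linear classifier.
# WEIGHTS, BIAS and the feature values are made up for illustration.
WEIGHTS = [0.9, -0.4, 0.7, 0.2]
BIAS = -0.5


def score(features) -> float:
    """Linear score: a positive value means 'person detected'."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


def is_person_detected(features) -> bool:
    return score(features) > 0


def sign(value: float) -> int:
    """Return 1, -1 or 0 depending on the sign of value."""
    return (value > 0) - (value < 0)


def adversarial_example(features, epsilon: float):
    """Fast gradient sign method (FGSM) for a linear model.

    For a linear score the gradient with respect to the input is just WEIGHTS,
    so each feature is nudged by at most epsilon in the direction that lowers
    the score.
    """
    return [x - epsilon * sign(w) for x, w in zip(features, WEIGHTS)]


if __name__ == "__main__":
    original = [0.6, 0.3, 0.5, 0.4]
    tricked = adversarial_example(original, epsilon=0.2)
    print("original:", original, "detected:", is_person_detected(original))
    print("tricked: ", [round(x, 2) for x in tricked], "detected:", is_person_detected(tricked))
```

No feature changes by more than 0.2, yet the detector's answer flips. In a real image model the features are thousands of pixel values and the model is not linear, so the gradient has to be calculated by a machine-learning library, but the idea is the same: lots of tiny nudges add up to a big change in the output.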

Future Proofing

Future proofing means protecting an A.I. system, or your business, from changes that happen in the future.

Think about the rise and fall of Bitcoin, or predicting marketing trends for popular movie series.

How A.I. can help with that:

https://aiforum.org.nz/2021/05/26/ai/

https://www.braincreators.com/brainpower/insights/how-ai-can-future-proof-your-business

We also need to think about which jobs A.I. could replace, and which skills we should focus on now that A.I. can do a lot of that work for us:

https://www.impact.acu.edu.au/career/future-proofing-your-career-ai-and-the-rising-importance-of-soft-skills-for-graduates

A.I. Policies

AI policies are rules that tell us how to make and use artificial intelligence in a fair and safe way. They make sure AI is created responsibly, respects privacy, treats people fairly, and is transparent about how it works. These policies are like guidelines to make sure everyone plays by the same rules when it comes to AI. (ChatGPT generated)

I want us to consider the following situations when it comes to A.I. policies:


spectrum.ieee.org/in-2016-microsofts-racist-chatbot-revealed-the-dangers-of-online-conversation

Tay was a chat bot released on Twitter. Within 24 hours it had turned into a racist, sexist, homophobic bot and had to be shut down.

www.theguardian.com/world/2023/aug/10/pak-n-save-savey-meal-bot-ai-app-malfunction-recipes

What were people doing to break the system, and how did the system break?

Chat bots and image bots are open to all sorts of terrible uses. If there were no policies for A.I., people could figure out how to get recipes for bombs, commit crimes more easily, and so on.
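To make this more concrete, here is a minimal Python sketch of an internal policy (a rule a company builds into its own product): the chatbot checks each request against a list of banned topics before answering. The topic list, the `violates_policy` and `answer` functions and the placeholder reply are all hypothetical; real products use far more sophisticated checks.

```python
# A minimal sketch of an internal A.I. policy: the chatbot checks every
# request against a list of banned topics before it answers.
# BLOCKED_TOPICS is a hypothetical, illustrative list.
BLOCKED_TOPICS = ("bomb", "explosive", "poison")

REFUSAL = "Sorry, I can't help with that request."


def violates_policy(prompt: str) -> bool:
    """Return True if the prompt mentions any blocked topic (case-insensitive)."""
    text = prompt.lower()
    return any(topic in text for topic in BLOCKED_TOPICS)


def answer(prompt: str) -> str:
    """Apply the policy check first, then (hypothetically) call the model."""
    if violates_policy(prompt):
        return REFUSAL
    # Placeholder standing in for a real model call.
    return f"(model reply to: {prompt})"


if __name__ == "__main__":
    for prompt in ["Give me a recipe using rice and bread", "How do I make a bomb?"]:
        print(prompt, "->", answer(prompt))
```

A simple keyword filter like this is easy to get around: a malicious user could misspell a word (for example "b0mb") and the check would not catch it. This is one reason human factors such as maliciousness make A.I. policies hard to enforce with code alone.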

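With A.I. we have external policies and internal policies. External policies are set from outside, for example government laws and regulations; internal policies are the rules a company or developer builds into its own A.I. products.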

Here is some ChatGPT text on the issue:

Why AI Needs Policies:

- Ensure Ethical Use: AI can make important decisions affecting people's lives, such as in healthcare or hiring. Policies ensure AI is used ethically, fairly, and without bias.

- Protect Privacy: AI often relies on large amounts of data. Policies are needed to protect people's privacy and ensure their data is used responsibly.

- Promote Transparency: AI algorithms can be complex and sometimes make mistakes. Policies ensure that AI systems are transparent about how they work, so people can understand and trust them.

- Address Bias: AI systems can unintentionally reflect biases present in their training data. Policies aim to identify and mitigate bias in AI systems to ensure fairness and equality.

- Foster Innovation: Clear policies can provide guidelines for developers, researchers, and businesses, fostering innovation while also ensuring safety and responsibility.

What AI Policies Might Cover:

- Ethical Guidelines: Establish principles for the ethical development and use of AI, including fairness, transparency, accountability, and respect for human rights.

- Data Governance: Define rules for collecting, storing, sharing, and using data in AI systems to protect privacy, ensure security, and prevent misuse.

- Algorithmic Transparency: Require AI systems to be transparent about their decision-making processes and provide explanations for their outputs to promote accountability and trust.

- Regulatory Frameworks: Develop laws and regulations to govern AI technologies across various sectors, addressing issues such as safety, liability, and compliance with existing laws.

- International Collaboration: Foster cooperation among countries to harmonize AI policies, share best practices, and address global challenges posed by AI technologies.

A.I. Hallucinations


"AI hallucination is a phenomenon wherein a large language model (LLM)—often a generative AI chatbot or computer vision tool—perceives patterns or objects that are nonexistent or imperceptible to human observers, creating outputs that are nonsensical or altogether inaccurate."

Copied from: https://www.ibm.com/topics/ai-hallucinations

Simplified by ChatGPT: AI hallucination is when a smart computer, like a chatbot or a tool that recognizes things in pictures, sees things that aren't really there. It might think it sees patterns or objects that people can't see, which leads it to make weird or wrong stuff.

Give this a thought:

(…) The Army trained a program to differentiate American tanks from Russian tanks with 100% accuracy. Only later did analysts realize that the American tanks had been photographed on a sunny day and the Russian tanks had been photographed on a cloudy day. The computer had learned to detect brightness. (…)

https://matej-zecevic.de/2021/255/fallacies-in-AI/

This story is an urban legend, but it does illustrate why hallucinations happen: the computer learned a pattern in its training data (brightness) that had nothing to do with what it was meant to recognize (the tanks).
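The tank story can be reproduced in a few lines. This is a hypothetical sketch with invented numbers: each photo is reduced to a brightness value and a noisy "shape" value, and a very simple learner (a decision stump, which picks the single feature and threshold that best separates the training photos) is trained on them. Because every American photo in this made-up data is bright and every Russian photo is dark, the learner reaches 100% training accuracy by learning brightness rather than anything about the tanks.

```python
# A toy version of the (legendary) tank story. All numbers are made up.
# Each "photo" is reduced to two hypothetical features:
#   brightness - how sunny the photo is (0 = dark, 1 = bright)
#   shape      - a noisy measure of the tank's outline
TRAIN = [
    {"brightness": 0.90, "shape": 0.70, "label": "American"},
    {"brightness": 0.85, "shape": 0.40, "label": "American"},
    {"brightness": 0.80, "shape": 0.60, "label": "American"},
    {"brightness": 0.20, "shape": 0.50, "label": "Russian"},
    {"brightness": 0.25, "shape": 0.30, "label": "Russian"},
    {"brightness": 0.15, "shape": 0.65, "label": "Russian"},
]
FEATURES = ("brightness", "shape")


def train_stump(rows):
    """Find the single feature and threshold that best separate the classes.

    Learned rule: feature > threshold means "American".
    Returns (number_correct, feature, threshold).
    """
    best = None
    for feature in FEATURES:
        values = sorted({row[feature] for row in rows})
        # Try a threshold halfway between each pair of neighbouring values.
        for low, high in zip(values, values[1:]):
            threshold = (low + high) / 2
            correct = sum(
                (row[feature] > threshold) == (row["label"] == "American")
                for row in rows
            )
            if best is None or correct > best[0]:
                best = (correct, feature, threshold)
    return best


def predict(stump, photo):
    """Classify one photo using the learned feature and threshold."""
    _, feature, threshold = stump
    return "American" if photo[feature] > threshold else "Russian"


if __name__ == "__main__":
    stump = train_stump(TRAIN)
    correct, feature, threshold = stump
    print(f"Learned rule: {feature} > {threshold:.2f} means American")
    print(f"Training accuracy: {correct}/{len(TRAIN)}")
    # An American tank photographed on a cloudy day.
    cloudy_american = {"brightness": 0.20, "shape": 0.70}
    print("Cloudy American tank classified as:", predict(stump, cloudy_american))
```

The model is "right" for the wrong reason, so an American tank photographed on a cloudy day gets labelled Russian. Training data that is more diverse (for example, tanks from both countries in all kinds of weather) would take away this shortcut.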

Many Fingered Hands

A good example of AI hallucination is how A.I. draws hands, often with too many fingers. Because it recognizes patterns that don't exist, it then uses those patterns to create objects that don't exist.

Here is what ChatGPT said when asked how to help with hallucinations:

- Diverse and Representative Training Data: Ensure that AI models are trained on diverse and representative datasets to reduce biases and improve generalization. This can help prevent hallucinations caused by overfitting to specific patterns in the training data.

- Regular Testing and Validation: Continuously test AI models on new and diverse datasets to identify potential hallucinations and errors. Implement robust validation procedures to ensure that AI outcomes align with expectations and real-world observations.

- Adversarial Testing: Conduct adversarial testing to assess the robustness of AI models against deliberate attempts to induce hallucinations or errors. This involves intentionally introducing subtle perturbations or variations in input data to evaluate the model's response.

- Interpretability and Explainability: Enhance the interpretability and explainability of AI models to better understand their decision-making processes and detect potential sources of hallucinations. This can involve techniques such as model visualization, feature importance analysis, and attention mechanisms.

- Human-in-the-Loop: Incorporate human oversight and intervention into AI systems to provide feedback, correct errors, and verify outputs. Human-in-the-loop approaches enable humans to intervene when AI outcomes are uncertain or potentially erroneous, helping to mitigate hallucinations.

- Ensemble Learning: Employ ensemble learning techniques to combine predictions from multiple AI models and reduce the risk of hallucinations caused by individual model errors. Ensemble methods can improve robustness and reliability by leveraging diverse model architectures and training strategies.

- Post-Processing and Error Correction: Develop post-processing techniques to filter out hallucinations and refine AI outcomes. This may involve applying statistical methods, error correction algorithms, or context-based reasoning to identify and rectify erroneous predictions.

- Ethical and Regulatory Oversight: Establish ethical guidelines and regulatory frameworks to govern the development and deployment of AI technologies, ensuring that AI outcomes adhere to ethical principles, societal norms, and legal requirements. Ethical oversight can help mitigate risks associated with hallucinations and promote responsible AI innovation.
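Two of the suggestions above, ensemble learning and human-in-the-loop, can be combined in a small sketch. It assumes three separate models have each answered the same question (the answers below are made up). The code takes a majority vote and, when the models do not agree strongly enough, flags the answer for a person to check instead of trusting it. The `ensemble_answer` function and its `min_agreement` threshold of two-thirds are illustrative choices, not a standard API.

```python
from collections import Counter

# Hypothetical sketch: combine answers from several models (an ensemble) and
# send the result to a human when the models disagree (human-in-the-loop).


def ensemble_answer(answers, min_agreement=2 / 3):
    """Majority vote over model answers.

    Returns (answer, needs_human_review). If fewer than min_agreement of the
    models agree on the top answer, it is flagged for a human to check.
    """
    if not answers:
        raise ValueError("need at least one answer")
    top_answer, votes = Counter(answers).most_common(1)[0]
    needs_review = votes / len(answers) < min_agreement
    return top_answer, needs_review


if __name__ == "__main__":
    # Made-up answers from three models to "What is the capital of New Zealand?"
    print(ensemble_answer(["Wellington", "Wellington", "Auckland"]))
    print(ensemble_answer(["Wellington", "Auckland", "Christchurch"]))
```

The reasoning behind this design: if one model hallucinates, the other models are unlikely to invent exactly the same wrong answer, so disagreement is often a useful warning sign that a human should take a look.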

Task: Answer these questions in OneNote:

Section C: Impacts

Think about the following impacts:

Define Human Factors:

What are some human factors to consider when developing A.I.?

How does this impact the development of A.I.?

How does A.I. have an impact on these human factors?

Define Future Proofing:

How does future proofing impact A.I.?

How does this impact the development of A.I.?

How does A.I. help with future proofing?

What are policies in terms of AI? Why are they important?

What are AI Hallucinations?

How can the impact of human factors be considered when developing policies for AI generating pictures or text?

What are AI Hallucinations? What future-proofing techniques could be used to minimize AI hallucinations?
